Embodied agents must detect and localize objects of interest, e.g. traffic participants for self-driving cars.

Supervision in the form of bounding boxes for this task is extremely expensive.

As such, prior work has looked at unsupervised instance detection and segmentation, but in the absence of annotated boxes, it is unclear how pixels should be grouped into objects and which objects are of interest.

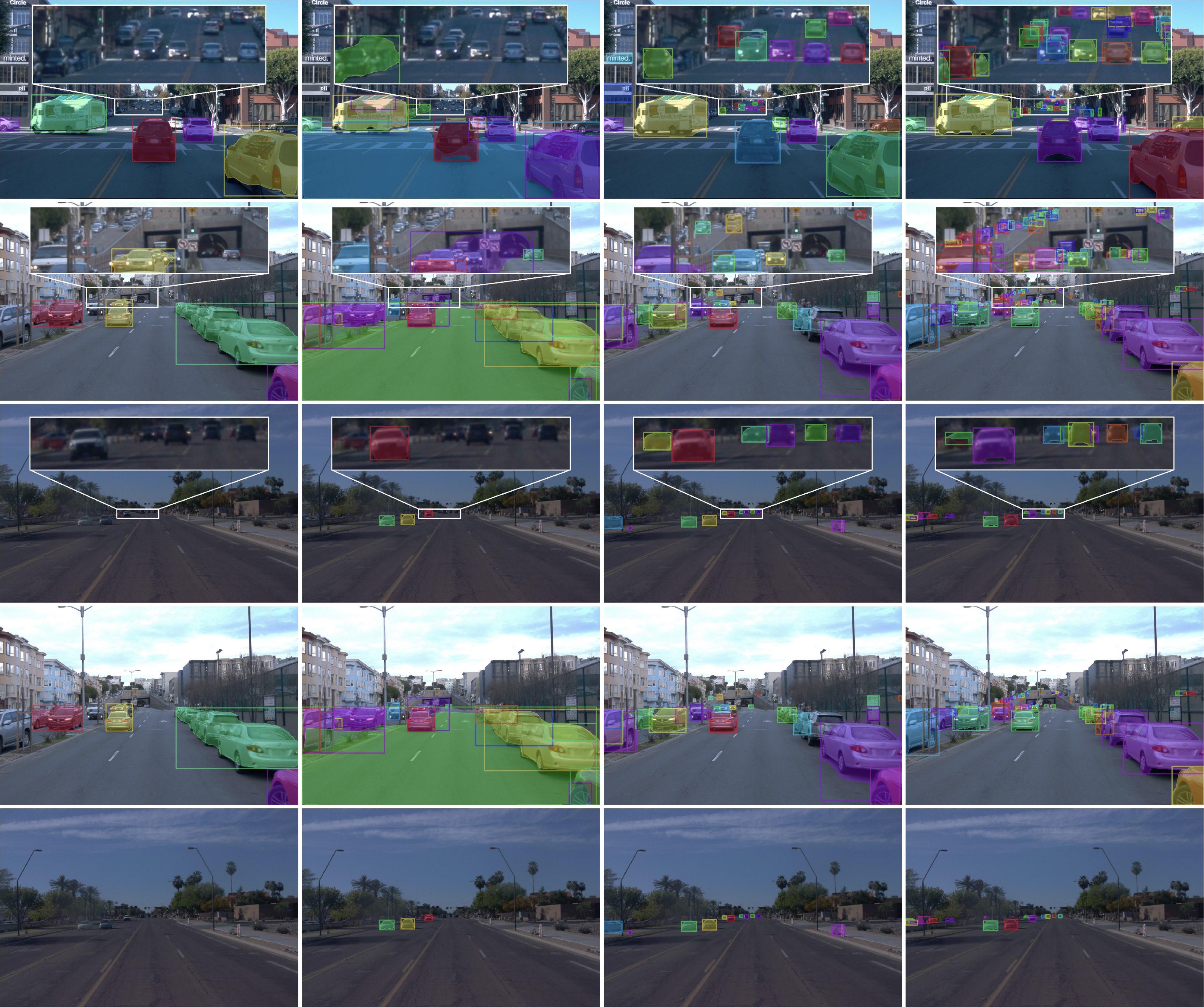

This ambiguity leads to over- or under-segmentation and to detections of irrelevant objects.

Inspired by the human visual system and by practical applications, we posit that the key missing cue for unsupervised detection is motion: objects of interest are typically mobile objects that move frequently, and their motion can delineate separate instances.

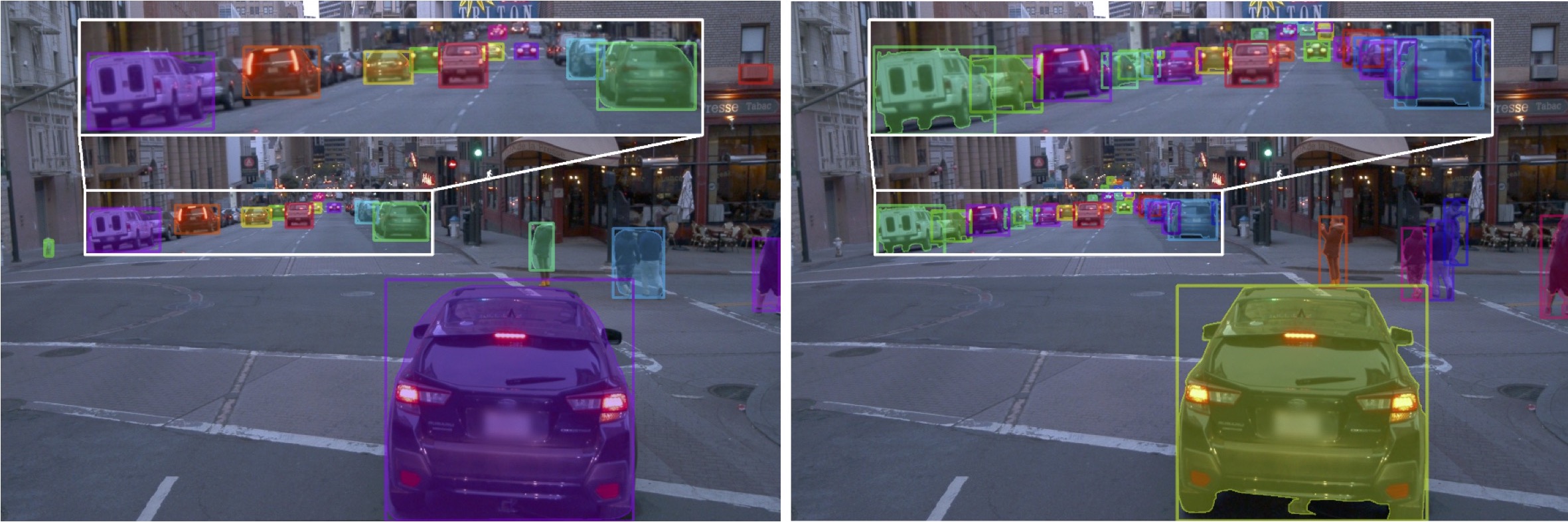

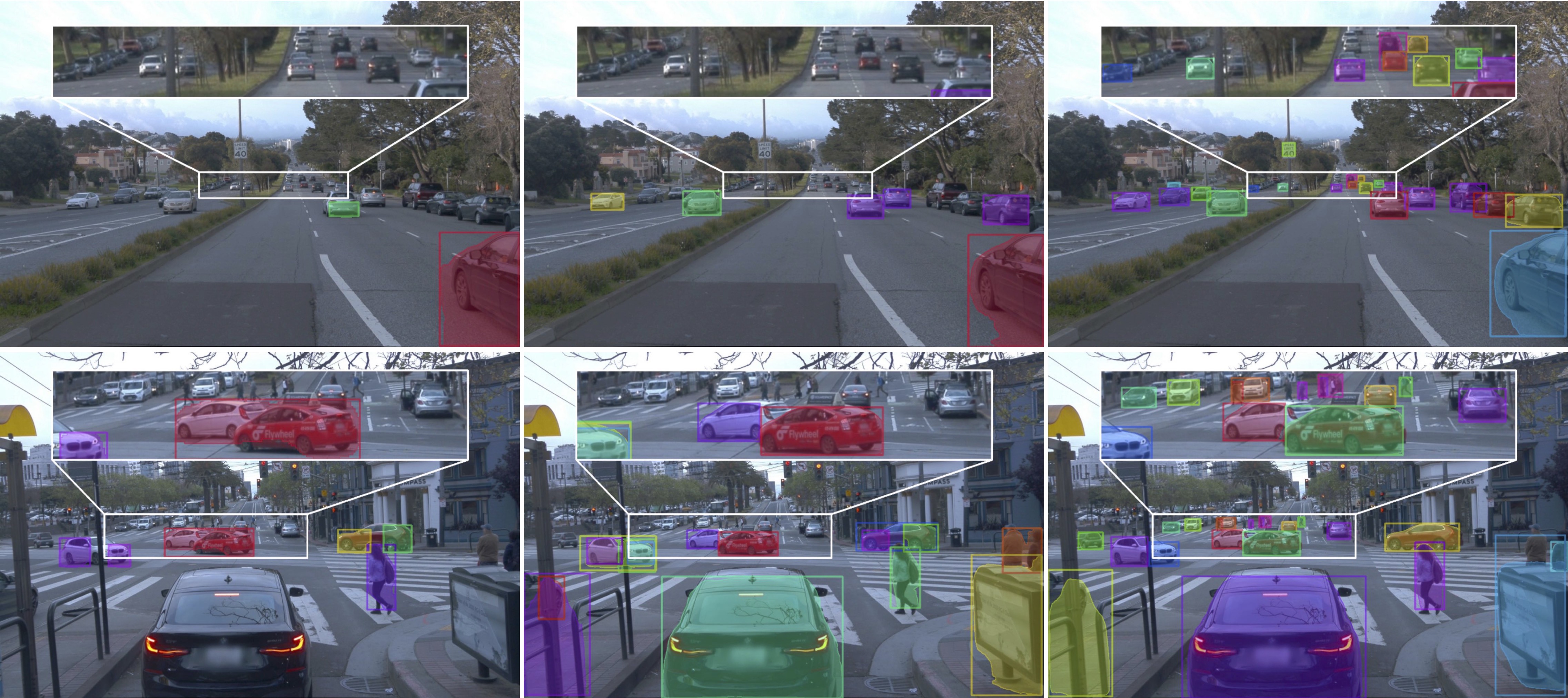

In this paper, we propose MOD-UV, a Mobile Object Detector learned from Unlabeled Videos only. We begin with instance pseudo-labels derived from motion segmentation, but introduce a novel training paradigm to progressively discover small objects and static-but-mobile objects that are missed by motion segmentation.
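To make the starting point concrete, the following is a minimal sketch of how instance pseudo-labels could be derived from a motion cue. It is not the MOD-UV pipeline: the function name `motion_pseudo_boxes`, the per-pixel motion-magnitude input, and the `thresh` and `min_area` parameters are all assumptions made for illustration. The sketch simply thresholds motion magnitude and groups moving pixels into 4-connected components, each of which yields one box.

```python
from collections import deque


def motion_pseudo_boxes(flow_mag, thresh=1.0, min_area=2):
    """Group moving pixels into 4-connected components and return their boxes.

    flow_mag: 2D list of per-pixel motion magnitudes (hypothetical input,
              e.g. the magnitude of an optical-flow field).
    Returns a list of (x_min, y_min, x_max, y_max) boxes, inclusive.
    """
    h, w = len(flow_mag), len(flow_mag[0])
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for y in range(h):
        for x in range(w):
            if seen[y][x] or flow_mag[y][x] < thresh:
                continue
            # Breadth-first flood fill over one connected moving region.
            queue = deque([(y, x)])
            seen[y][x] = True
            x0, y0, x1, y1, area = x, y, x, y, 0
            while queue:
                cy, cx = queue.popleft()
                area += 1
                x0, x1 = min(x0, cx), max(x1, cx)
                y0, y1 = min(y0, cy), max(y1, cy)
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < h and 0 <= nx < w and not seen[ny][nx]
                            and flow_mag[ny][nx] >= thresh):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Assumption: very small regions are treated as noise and dropped.
            if area >= min_area:
                boxes.append((x0, y0, x1, y1))
    return boxes


# Toy motion map: a 2x2 mover, a 2-pixel mover, and one isolated noisy pixel.
flow = [
    [0, 0, 0, 0, 0, 0],
    [0, 3, 3, 0, 0, 0],
    [0, 3, 3, 0, 2, 0],
    [0, 0, 0, 0, 2, 0],
    [0, 0, 0, 0, 0, 5],
]
boxes = motion_pseudo_boxes(flow)
print(boxes)  # [(1, 1, 2, 2), (4, 2, 4, 3)]
```

In this toy example the isolated pixel is discarded and a parked object would produce no box at all, which illustrates why pseudo-labels from motion alone miss small and static-but-mobile objects.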

As a result, although it is learned only from unlabeled videos, MOD-UV can detect and segment mobile objects from a single static image.

Empirically, we achieve state-of-the-art performance in unsupervised mobile object detection on the Waymo Open, nuScenes, and KITTI datasets without using any external data or supervised models.